I’ve loved bridges ever since I was little.

On a hot summer day, I would jump off the smol one in my neighborhood into the pond.

On that bridge, I would re-enact my favorite scenes from Monty Python with my imaginary friends.

So it’s no surprise that in my twenty-seventh year of living, I’ve become absolutely enamored with bridges. But this time, they’re cRyPtO bRiDGeS.

A Primer

Have you ever been hiking and tried going across a river, instead of using the bridge? I have, and I can tell you that it sucks.

Cryptocurrency bridges (and their fancier cousins: “interoperability protocols” and “generalized messaging layers”) deliver the same value as their real-world counterparts.

They connect blockchains together. Without bridges, moving from one chain to another ranges from a painful user experience (Bitcoin to Ethereum via Binance) to physically impossible (Filecoin to [insert Polkadot parachain that isn’t DotSama]).

But crypto bridges do more than just connect. They also allow for translation of language and rules between blockchains.

Without that translation, it’d be like if you crossed over an IRL bridge and, on the other side, everyone is speaking in 💧︎◻︎♋︎■︎♓︎⬧︎♒︎, left is right, right is backwards, gravity is inverted, the sun is bleeding — you get my point.

You’d be pretty damn confused, right? That’s how data packets feel when you drop them into a new blockchain. They’d be so lost that they would just sit there and do nothing. Poor data packets :(

Bridges enable data to be transmitted and interpreted between disparate environments. They allow for interoperability between chains.

“But is the future even multi-chain, Jim?” you nasally ask.

Um, yes.

The genie is out of the bottle.

There must and will always be multiple blockchains — multiple zones of activity from Cosmos to Avalanche to Aptos to Ethereum. If nothing else, people’s bags insist on it.

There’s no world where Ethereum is the sole global settlement layer and everything is all neatly rolled up on top of it — despite being a much simpler future state to grok.

And these blockchains need a communication layer in order to coordinate settlement. Or else, they’ll be isolated from one another forever.

The people want multi-chain and the people love bridges.

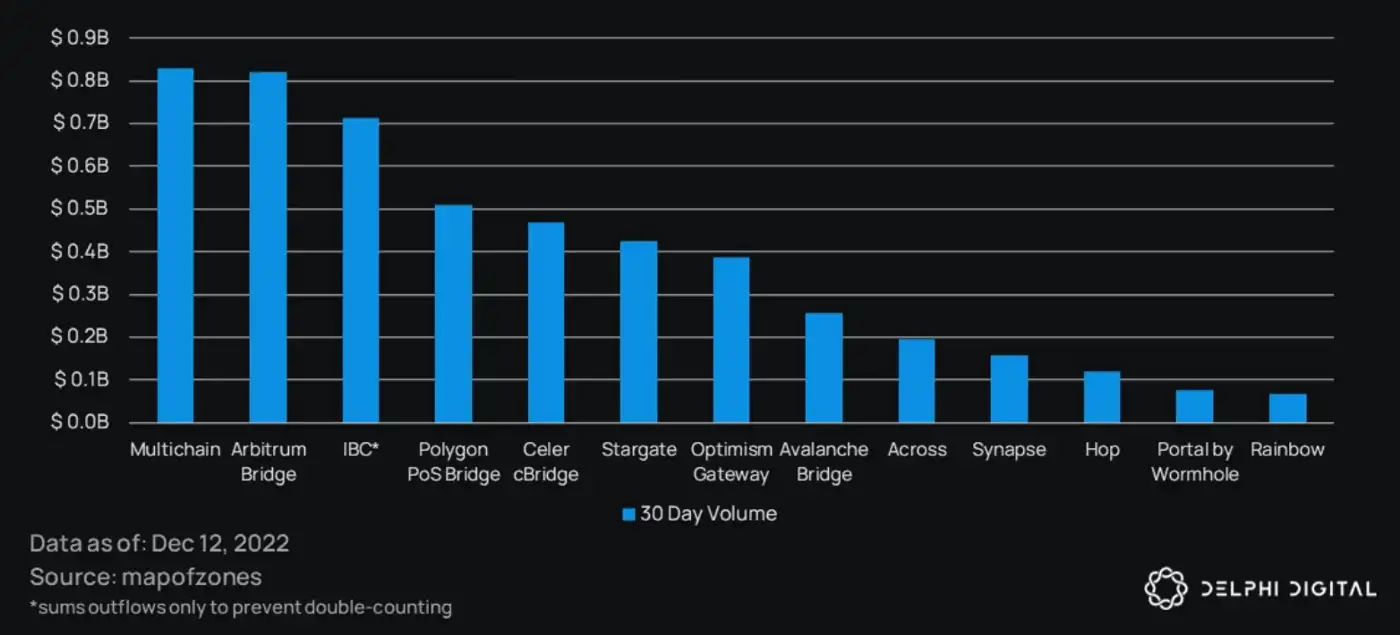

In the past 30 days alone, ~$5B of value has been transmitted on bridges. Moreover, bridge companies are getting H E L L A love from investors.

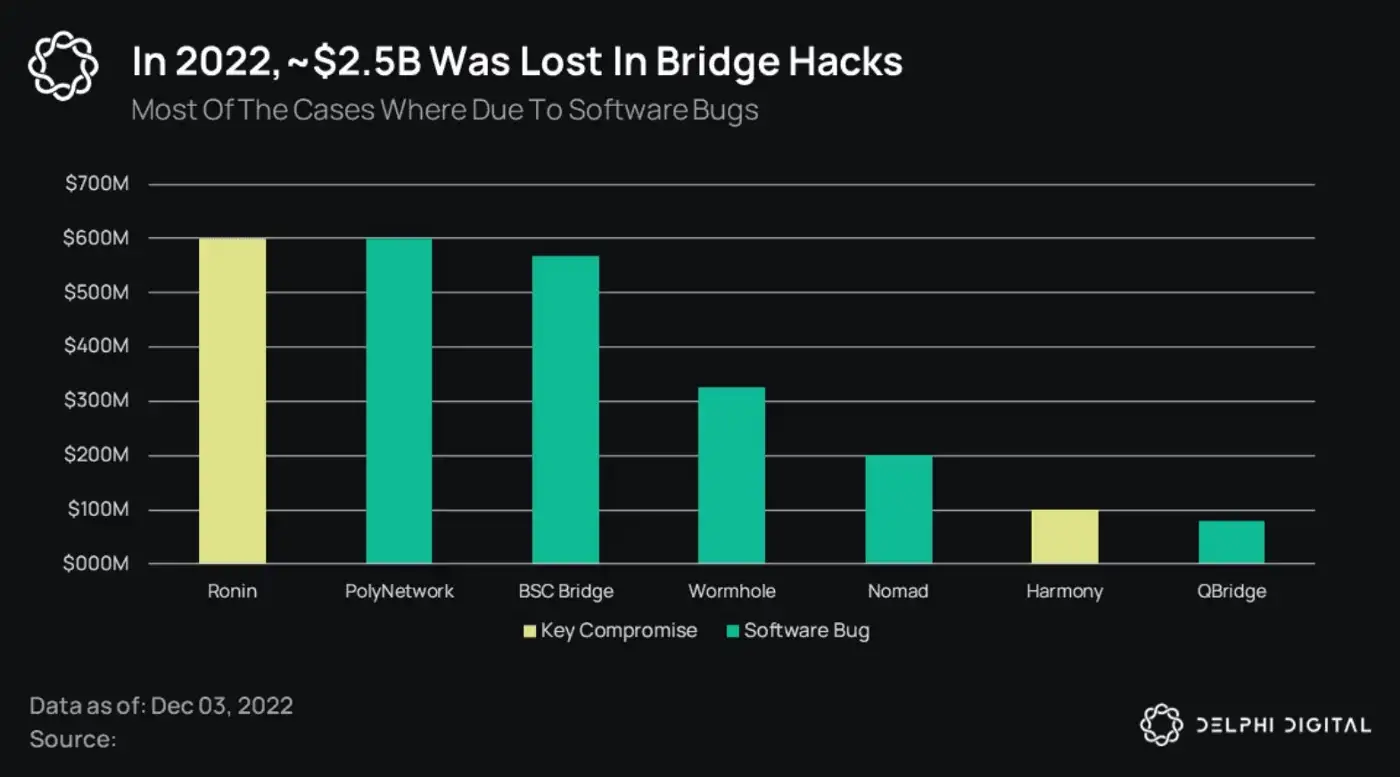

Sadly, this overflowing market love also means that bridges are a prime candidate for crypto’s favorite pastime: exploits.

Over $2 billion has been lost to exploits in the past year, due to either a flaw in core mechanism design or a smart contract bug.

The Holy Grail

How would I define the “ideal” bridge?

No need to memorize another trilemma framework: I think the ideal bridge is the one that is maximally secure.

After a long year in crypto, safe and sound mechanism design is needed in order to restore user confidence.

The most secure bridge design is the one that is the most trust-minimized, which is a fancy way of saying that the bridge inherits the security properties of the two chains it’s connecting.

This is done through on-chain verification — where the destination chain (i.e., the chain receiving the bridged transaction) verifies the consensus of the origin chain and sees that the specified transaction was indeed included in their blockchain.

This is typically accomplished by the destination chain’s validators running an on-chain light client of the origin chain. The light client checks that the submitted block header has been signed by the origin chain’s active validator set and that (yes!) the specified transaction is indeed included under that header’s Merkle root. Then, the destination chain’s validators can confirm the transaction’s validity and move to include the transaction on their blockchain.
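For the code-minded, here is a minimal sketch of the inclusion half of that check. It assumes the Merkle root has already come from a header whose validator signatures were verified (that part is omitted), and it uses a plain SHA-256 binary tree that duplicates the last node on odd levels. Real chains use their own tree layouts and hash functions, so the function names and the tree shape here are illustrative assumptions.

```python
import hashlib


def h(data: bytes) -> bytes:
    """SHA-256 digest, used here as a stand-in for the chain's hash function."""
    return hashlib.sha256(data).digest()


def _next_level(level):
    """Hash adjacent pairs to build the parent level (expects an even length)."""
    return [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]


def merkle_root(leaves):
    """Root of a binary Merkle tree over a non-empty list of byte strings."""
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # duplicate the last node on odd levels
        level = _next_level(level)
    return level[0]


def merkle_proof(leaves, index):
    """Sibling hashes from leaf to root, each tagged with whether it sits on the left."""
    level = [h(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        sibling = index ^ 1  # the other node in this pair
        proof.append((level[sibling], sibling < index))
        level = _next_level(level)
        index //= 2
    return proof


def verify_inclusion(leaf, proof, root):
    """What the light client does: recompute the root from the leaf and its siblings."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


# Origin chain side: build the tree and a proof for the third transaction.
txs = [b"tx0", b"tx1", b"tx2"]
root = merkle_root(txs)
proof = merkle_proof(txs, 2)

# Destination chain side: only the leaf, the proof, and the trusted root are needed.
print(verify_inclusion(b"tx2", proof, root))
print(verify_inclusion(b"forged", proof, root))
```

The point of the design is that the destination chain never needs the full list of transactions, just a handful of sibling hashes.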

If that sounded like complete technical jargon, I’ll put it a different way.

Let’s say it’s Friday night and you’re out with a group of friends (lucky). Someone who you’re not particularly close with texts your friend, inviting you all to come to this insane rave in deep Bushwick (yes, we’re assuming in this hypothetical scenario that you are currently in New York — Chelsea to be precise).

Should you go? You’re all bored, but also very skeptical of the legitimacy of this person’s claim. What if this person is lying (or has a low bar for parties) and you all waste time and money going all the way over to Bushwick?

You remember that this rave is actually being livestreamed on Instagram. You all hurriedly open your phones and see — indeed — this rave is INSANE!

You and your friends are the destination chain validators.

The person’s text message is the transaction.

The rave is the origin chain.

The livestreamed Instagram Story is… the light client?

Eh — close enough.

The Faustian Bargain

“OK Jim, sounds easy enough. Let’s just have all chains implement on-chain light clients!” you exclaim as you read this between your McDonald’s shifts.

If only it were that easy.

Running an on-chain light client is complicated and computationally intensive (i.e., expensive). It’s not scalable — as every validator is required to run a light client for each origin chain. It’s difficult to expand to new chains, and quite literally impossible for some — as different consensus signature schemes aren’t supported in all execution environments (e.g., Ethereum verifying Tendermint consensus).

To date, only IBC on Cosmos app-chains has implemented on-chain light clients at scale (Gravity Bridge, Rainbow Bridge, Composable Finance, and Snowbridge are tough to scale).

This feat was only achieved through extreme standardization — where every app-chain runs Tendermint consensus and adheres to the IBC standards.

In a world of many chains of differing consensus mechanisms, signature schemes, and virtual machines, having on-chain light client verification is impossible 🥹

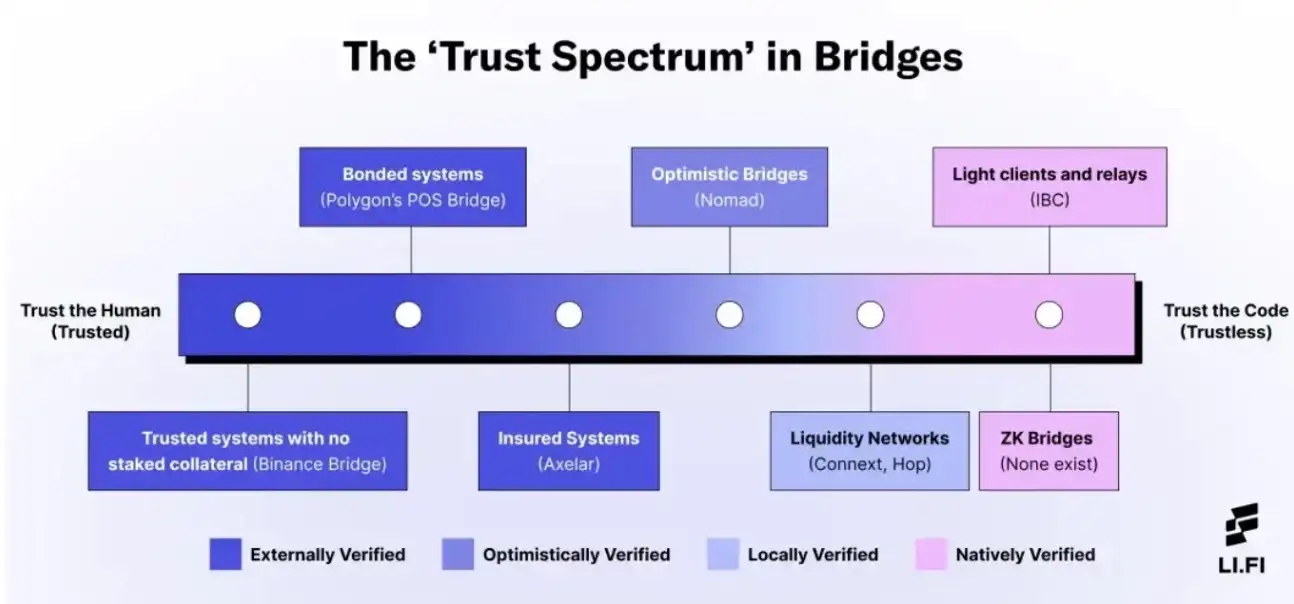

So a tradeoff must be made: how many trust assumptions need to be made in order to gain feasibility/scalability?

And here lies the deal with the devil that so many bridge projects have made — creating the so-called trust spectrum, depending on the magnitude of tradeoffs made.

Off-chain verification

OK, so if on-chain light client verification isn’t possible, then the only option left is to do the verification off-chain.

This is where protocols vary in their implementation.

On one end, you have Team Human — Multichain, Wormhole, Ronin Bridge (among others). These are the multi-signature implementations that require entities to verify transactions, and to attest (i.e., sign) to that validity. After a threshold is passed, the transaction is considered verified.

These implementations require entities (think businesses or really famous people) to run full nodes (beefier than light clients) in order to do the verification. Of course — these humans can still lie, but the assumption is that majority rulez and entities wouldn’t rug their reputation by being dishonest.
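A minimal sketch of the threshold rule, assuming signature checking has already happened elsewhere (so an attestation is reduced to the signer’s name). The signer names and the 3-of-5 threshold are made up for illustration.

```python
def threshold_met(attesters, signer_set, threshold):
    """True once enough distinct, authorized signers have attested.

    Signature verification is omitted: `attesters` is assumed to contain
    the names of signers whose signatures already checked out.
    """
    valid = set(attesters) & set(signer_set)  # drop duplicates and outsiders
    return len(valid) >= threshold


# Hypothetical 3-of-5 multisig.
signers = {"alice", "bob", "carol", "dave", "erin"}

print(threshold_met(["alice", "bob", "carol"], signers, 3))
# Duplicate and unknown signers don't count toward the threshold.
print(threshold_met(["alice", "alice", "bob", "mallory"], signers, 3))
```

Note what is missing: nothing here proves the transaction exists on the origin chain. The destination chain is trusting that at least `threshold` signers looked and told the truth.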

Team Human’s nextdoor neighbor is Team Economics — Celer, Axelar, deBridge, Hyperlane, Thorchain (among others). These are similar to multisigs, but with an added layer of Proof of Stake. Now instead of trusting the entities (called validators here) to collectively sign on a transaction’s validity, there is economic incentive to not lie — or else a validator’s stake will be slashed.

These implementations also typically require validators to run full nodes of origin chains (though this isn’t enforced), and are only as secure as the total economic stake. In theory, Team Economics can be more secure than the underlying blockchain they’re connecting (bridge stake > chain stake), but in practice, I’ve yet to see it happen.
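Here is a rough sketch of how the multisig rule changes once stake is involved. The two-thirds quorum, the validator names, and the slashing percentage are all assumptions for illustration, not any specific protocol’s parameters.

```python
from dataclasses import dataclass


@dataclass
class Validator:
    name: str
    stake: int  # bonded tokens at risk


def stake_quorum_met(validators, attesters, num=2, den=3):
    """True if strictly more than num/den of total stake attested."""
    total = sum(v.stake for v in validators)
    signed = sum(v.stake for v in validators if v.name in attesters)
    return signed * den > total * num  # integer math avoids float rounding


def slash(validator, percent):
    """Burn a percentage of a lying validator's stake and return the penalty."""
    penalty = validator.stake * percent // 100
    validator.stake -= penalty
    return penalty


validators = [Validator("a", 50), Validator("b", 30), Validator("c", 20)]

print(stake_quorum_met(validators, {"a", "b"}))  # 80 of 100 staked
print(stake_quorum_met(validators, {"a"}))       # 50 of 100 staked
print(slash(validators[0], 50), validators[0].stake)
```

The security budget is visible in the numbers: an attacker who controls more than two-thirds of the bonded stake can attest to anything, and the most they can lose is that stake.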

Next up is Team Game Theory — LayerZero and optimistic bridges like Nomad and Synapse. These implementations break up bridging into two separate jobs and dis-incentivize coordination between the two job doers.

LayerZero created the Ultra Light Node, which is essentially a just-in-time light client delivered on an as-needed basis. Oracles pass block headers, and relayers pass transaction proofs. Together, the two perform the duties of an on-chain light client, but at lower cost because they don’t run on a continuous basis.

Optimistic bridges also have two off-chain agents: an updater and a watcher (in Nomad’s terms). The updater passes the merkle root of an origin chain, and there’s a challenge period (e.g., 10 minutes) in which any watcher can challenge the validity of the merkle root passed. Erroneous updaters will have their stake slashed (yes, they’re also Team Economics).
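The updater/watcher dance can be sketched as a tiny state machine. This is a toy model under loud assumptions: a challenge is taken to be valid on its face (a real system needs a fraud proof or some dispute process), the full stake is slashed, and the class and method names are hypothetical rather than Nomad’s actual contracts.

```python
from dataclasses import dataclass


@dataclass
class PendingRoot:
    root: str
    submitted_at: int  # seconds
    challenged: bool = False


class OptimisticBridge:
    def __init__(self, challenge_period, updater_stake):
        self.challenge_period = challenge_period
        self.updater_stake = updater_stake
        self.pending = {}

    def submit_root(self, root, now):
        """Updater posts a Merkle root; it is not usable yet."""
        self.pending[root] = PendingRoot(root, now)

    def challenge(self, root, now):
        """Any watcher can flag a root while the window is open."""
        entry = self.pending[root]
        if now - entry.submitted_at >= self.challenge_period:
            raise ValueError("challenge period has expired")
        entry.challenged = True
        slashed, self.updater_stake = self.updater_stake, 0
        return slashed

    def is_accepted(self, root, now):
        """A root counts only if it survived the whole window unchallenged."""
        entry = self.pending.get(root)
        if entry is None or entry.challenged:
            return False
        return now - entry.submitted_at >= self.challenge_period


bridge = OptimisticBridge(challenge_period=600, updater_stake=1_000)  # 10 minutes
bridge.submit_root("good_root", now=0)
bridge.submit_root("bad_root", now=0)

print(bridge.challenge("bad_root", now=100))    # watcher catches the bad root
print(bridge.is_accepted("good_root", now=599))  # still inside the window
print(bridge.is_accepted("good_root", now=600))
print(bridge.is_accepted("bad_root", now=600))
```

The tradeoff is right there in `is_accepted`: safety comes from waiting out the window, so latency is the price of needing only one honest watcher.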

In both implementations, an off-chain agent needs to run a full node in order to verify transactions. Also in both implementations, a collusion between the two agents can lead to an erroneous transaction being passed.

However, optimistic bridges require an honest minority assumption in order to correctly verify a transaction — meaning anyone (in theory) can be an agent, so there only needs to be one honest actor for the system to work.

For now, LayerZero has permissioned off-chain agents, relying on the trusted reputations of institutions for the system to work. Over time, LayerZero wants app-designated oracle-relayer pairs in order to dis-incentivize collusion (more on this later).

Finally, there’s Team Security: Datachain/LCP Network. They utilize a trusted execution environment (TEE) like Intel SGX to perform encrypted off-chain light client verification, which they call Light Client Proxy. Trust, in this instance, rests on the security of the TEE, which can be compromised. We saw this with the Secret Network exploit, in which privacy for the network’s entire history was broken by obtaining the master decryption key through an SGX side-channel.

At the end of the day, someone or something needs to do the verification — whether it’s programmatically through an ecosystem of “off-chain agents” (as all the projects call them; e.g., watchers, oracles, updaters, relayers, validators, etc.) or even a manual check-the-chain from a hooman.

And the destination chain validators need to trust that the verification was done correctly — because there’s no way for them to verify themselves.

On-chain verification

What if there actually was a way to perform on-chain light client verification?

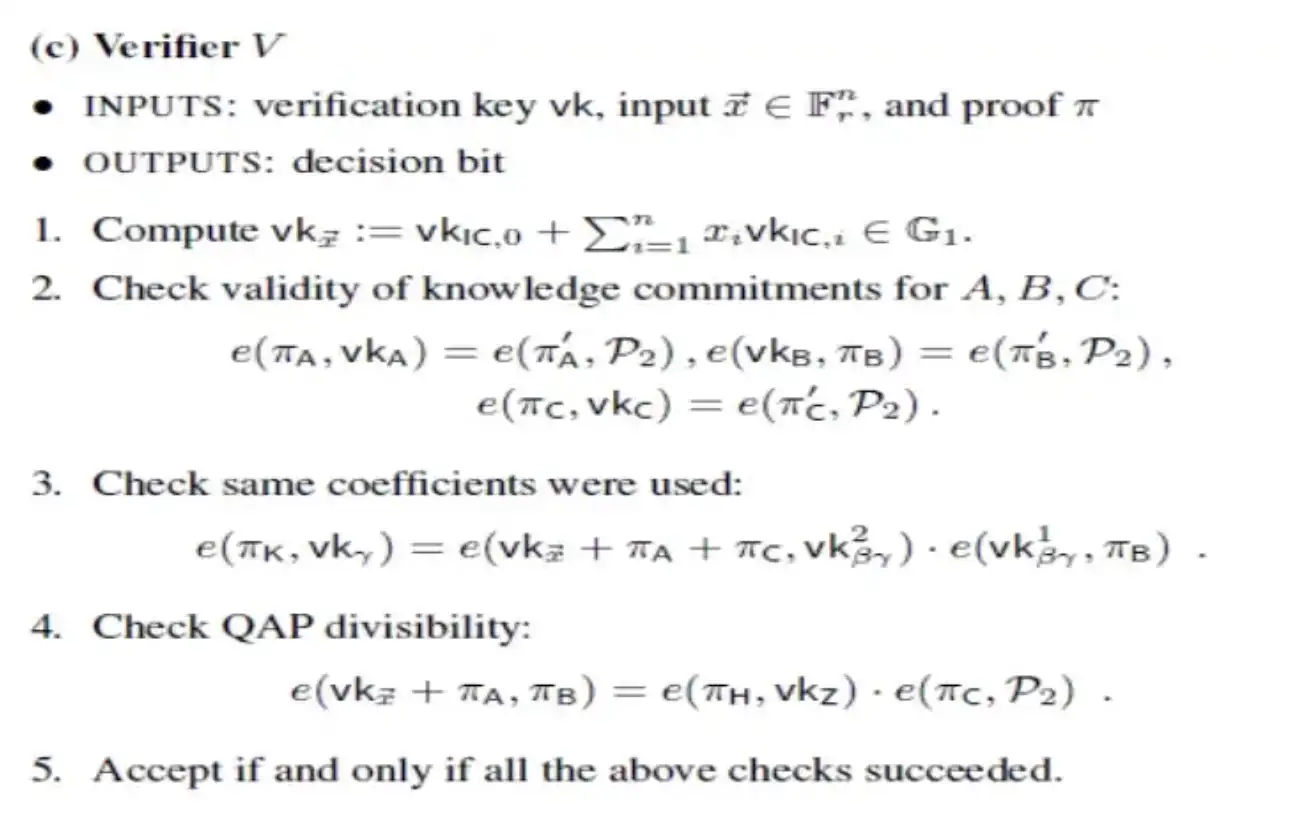

That’s where Team Math comes in — Polymer, Succinct, Electron Labs, and zkBridge. These projects are at the forefront of Zero Knowledge SNARK research, using succinct proofs in order to scale on-chain verification for bridging.

Technically, the verification of origin chains’ consensus (of the various signature schemes) is done off-chain. A SNARK proof is generated by an off-chain prover showing this verification has been performed, and that proof is then verified on-chain on the destination chain.

And a SNARK can’t be wrong because… math.

If you like math and computer science, SNARKs are for you. Source: Vitalik blog

“Yoooo — we did it, Jim! This is the Holy Grail of bridging!” you text me while driving (please don’t).

Not so fast.

ZK light clients are a nascent technology that — for the moment — are limited in scope.

Namely, Succinct is live for an Ethereum-to-Gnosis bridge (bi-directional), Electron Labs is working on a Cosmos-to-Ethereum bridge (uni-directional), and Polymer is developing a network of light clients across L1s and L2s (omni-directional).

New circuits (i.e., computer science gigabrainery) are required in order to expand to other consensus mechanisms, and the work is hard.

Moreover, ZK light clients need deterministic finality — they can’t work with Proof of Work chains like Bitcoin. With that said, there are workarounds being developed, such as encoding non-determinism inside a circuit in the light client itself.

(And for the sake of exhaustiveness, I’ll add Rollup Bridges in Team Math, which prove every state transition and are even more trust-minimized than ZK light clients that prove consensus).

The Sobering Truth

Through my hundreds of hours of research into bridges, I’ve reached the conclusion that the answer to the “optimal” bridge is not straightforward — especially in the near and medium term.

This space is nascent. People are dogmatic about their beliefs about what will be the winning technological solution.

I ultimately lean towards a pragmatic approach. It will not be the best tech that wins, but the best product — built by a team that knows exactly what their users want (in this case, developers) and builds an experience and GTM in order to deliver value.

As someone who has cut their teeth building crypto applications, I know what app developers want. This is what I think a good bridge product may look like:

1. A hybrid approach of technologies

Technology itself is not the product — it enables the product. A mix-and-match of security models can create a best-of-both-worlds experience for users.

FWIW, I think Team Math is the right direction, but the effective scope is limited, and they trade off extensibility (i.e., ability to expand to new chains) for trust minimization.

An approach where Team Game Theory and Team Math join forces may be the all-star roster that we need.

Optimistic bridging for fulfilling the first leg of the transaction — with a challenge period and honest minority trust assumption. Trust-minimized ZK bridging for validated refunds on the return leg.

You can read more about this hybrid solution here.

Another approach is building a modular stack to slot in additional security models over time — like LayerZero, Connext, and Hyperlane — to be mixed and matched accordingly.

2. Another entity eats the finality risk

Finality is the name-of-the-game when it comes to bridges. Bridge protocols need to be absolutely sure that a transaction has occurred in the origin chain before passing it on to the destination chain.

There’s no way to perform a rollback cross-chain.

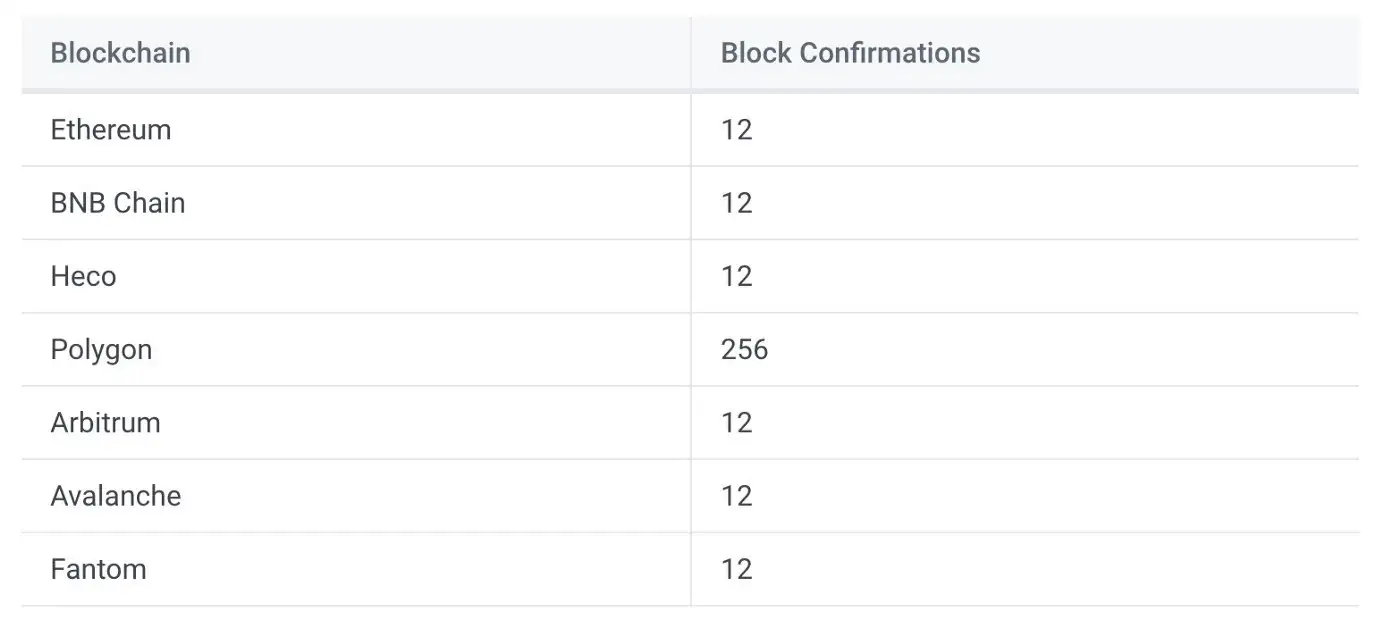

In instant finality chains like Cosmos app-chains built on Tendermint, this happens in a matter of seconds (i.e., one block). However, other chains take longer to reach finality, and some need a buffer period in order to account for the probabilistic/economic viability of a re-org.

In the case of sending a transaction from Ethereum, users can’t wait ~12 minutes in order to reach finality and have their transaction confirmed. For optimistic rollups, this is exacerbated as finality is technically achieved after the seven-day challenge period expires.

The latency of finality will need to be abstracted from users, and other players will need to take on the re-org/uncle block risk. Of course — players will need to be financially compensated by users for this service.
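As a back-of-the-envelope sketch of that arrangement: a third party (call them a “fronter”, a made-up term) pays the user on the destination chain right away, keeps a fee, and only gets made whole once the origin chain reaches finality. The Ethereum and optimistic rollup delays come from the figures above; the Tendermint value and the fee rate are assumptions.

```python
# Approximate time until an origin-chain transaction is final, in seconds.
FINALITY_SECONDS = {
    "tendermint": 6,                        # assumption: roughly one block
    "ethereum": 12 * 60,                    # ~12 minutes
    "optimistic_rollup": 7 * 24 * 60 * 60,  # seven-day challenge period
}


def fast_transfer(amount, origin, fee_bps):
    """Split a transfer between the user and the party fronting the funds.

    fee_bps is a hypothetical fee in basis points (1 bps = 0.01%).
    """
    fee = amount * fee_bps // 10_000
    return {
        "user_receives_now": amount - fee,
        "fronter_fee": fee,
        "fronter_repaid_after_s": FINALITY_SECONDS[origin],
    }


print(fast_transfer(1_000_000, "ethereum", fee_bps=5))
```

The longer the repayment delay, the longer the fronter’s capital is locked and exposed to a re-org, which is why you’d expect the fee to scale with the origin chain’s finality time.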

3. Configurable risk appetite

In addition to abstracting away latency, the variations of risk will need to be abstracted from users.

The application should have control over its risk threshold and have the threshold be customizable based on use case, amount, and destination chain. For example, an NFT transfer on BSC should have a different threshold than a liquidation on Cosmos.

LayerZero, Synapse, and Hyperlane are allowing applications to determine their risk appetite.

For LayerZero, applications can pick a relayer and oracle pair that they trust — as well as a timer for finality.

For Synapse, applications can choose their fraud window based on use case.

For Hyperlane, applications can even select their desired consensus mechanism (Team Human, Team Economics, Team Game Theory — called Interchain Security Modules).
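To make “configurable risk appetite” concrete, here is a hypothetical policy table an application might keep. None of this is the actual LayerZero, Synapse, or Hyperlane API: the policy names, fraud windows, and dollar cutoff are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskPolicy:
    verification: str     # which security model to use
    fraud_window_s: int   # how long to wait before accepting a message


# Keyed by (use case, destination chain); values are illustrative only.
POLICIES = {
    ("nft_transfer", "bsc"): RiskPolicy("optimistic", 30 * 60),
    ("liquidation", "cosmos"): RiskPolicy("staked_validators", 0),
}
DEFAULT_POLICY = RiskPolicy("optimistic", 30 * 60)
HIGH_VALUE_USD = 1_000_000


def pick_policy(use_case, destination, amount_usd):
    """Choose a verification policy from use case, destination, and amount."""
    policy = POLICIES.get((use_case, destination), DEFAULT_POLICY)
    if amount_usd >= HIGH_VALUE_USD and policy.verification == "optimistic":
        # Bigger transfers get a longer fraud window before acceptance.
        policy = RiskPolicy(policy.verification, policy.fraud_window_s * 4)
    return policy


print(pick_policy("nft_transfer", "bsc", 500))
print(pick_policy("liquidation", "cosmos", 50_000))
print(pick_policy("nft_transfer", "bsc", 2_000_000))
```

A time-sensitive liquidation can’t sit through a fraud window, so it leans on a different security model, while a cheap NFT transfer can afford to wait.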

This is where bridge aggregators like Socket and LIFI are also especially helpful because different bridge implementations can ultimately be configurable by the application as well.

4. BD matters.

OK, technically not a product but rather a team priority.

A war is being fought in the trenches of bridging protocols. Every project is well capitalized heading into the bear, sopping up top talent, and innovating on the forefront of cryptography.

Yet there are no apps on top of them. Where are the apps?

To date, only token transfer protocols (Connext, Hop, Across, Stargate) have found success. The lauded use cases of NFT game asset marketplaces and cross-chain governance have not come to fruition (aside from DeFi Kingdoms).

This is not a “build it and they will come” space. Bridges are developer platforms and need vibrant app ecosystems on top of them.

Without apps, there are no users. And that’s why business development and integrations are crucial in order for a project to succeed.

At the end of the day, applications will follow the money. It may not be the technology that wins, but the relationships, the integrations, the business factors that determine the winner.

Bridge usage may follow a power law for assets, chain connections, and use cases.

5. Being upfront. It’s trust assumptions all the way down.

As mentioned before, trust is a spectrum. Even the most secure technologies are not as trustless as they appear. I’ve learned this lesson time and time again during my over four years of working in crypto.

A bridge is only as strong as its weakest connected chain (i.e., it may be susceptible to a 51% attack on that chain). This is a huge deterrent to a future with multi-hop bridging.

Most smart contract implementations are mutable. Upgrades happen semi-regularly. There’s always someone/something with admin privileges. Hats off to the teams who ship immutable code (which has its own downsides let’s be frank).

A bridge that allows an application to configure its risk appetite will also introduce an attack vector in which an attacker can infiltrate the application’s contracts and disable any verification process for a malevolent transaction.

Protocol disputes are resolved by governance or a multisig. Human error is introduced into the equation.

Even ZK bridges are not fully trust-minimized. There’s trust that the circuits are written correctly. Trust that the prover software has no bugs. Trust that the destination smart contract can interpret the circuit correctly. All things that the strength of a project’s brand, the Lindyness of the team, and slowly and methodically shipping secure code should ideally fix.

Being upfront and intellectually honest with developers will go a long way.

The Road Ahead

Phew.

It feels good to write down all my thoughts on bridges. I’ll end with a thought that couldn’t quite fit into the framework of the blog post.

I believe in the next 2–3 years, we’ll see an explosion in deployment of app-specific rollups.

There are SO many solutions positioned to service this explosion: OPStack, Arbitrum Nova+Nitro, Fuel, Polygon Supernets, Avalanche with their subnets, Scroll, Polygon Hermez, Consensys zkEVM, Starknet, zkSync, Celestia, Astria, Saga, Dymension, etc.

However, bridging seems to be the missing piece in all of these solutions.

How can we ensure app-rollup to app-rollup bridging? Companies like Slush, Constellation Labs, and Sovereign Labs (and tangentially LaGrange and Herodotus) are sort of thinking of this problem while creating their app-rollup SDK.

How can we have bridging out-of-the-box for these app-rollup-as-a-service solutions? Hyperlane is working on this, but it’s a hard problem to tackle.

How can we optimize multichain capital efficiency while removing bridge exploits without sacrificing user/dev experience?

These are the key questions that I’ll be tackling in my next endeavor (soon™).

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

Thank you for the many late night talks and all-day whiteboarding sessions with the Hyperlane, Succinct, LayerZero, Polymer, Geometry, and Sovereign Labs teams.

Thank you to the Variant, Andreessen, 1kx, and CoinFund teams for riffing with me on where the industry is moving.

Thank you to Aurelius from Synapse for pilling me earlier this year on the bridge space. Thank you for the especially insightful calls to Nick Pai from Across, and Rob and Ceteris from Delphi.

Thank you to Peter, David, Yuan, Dmitriy, and Tina for reviewing my work. You all make me smarter every day.